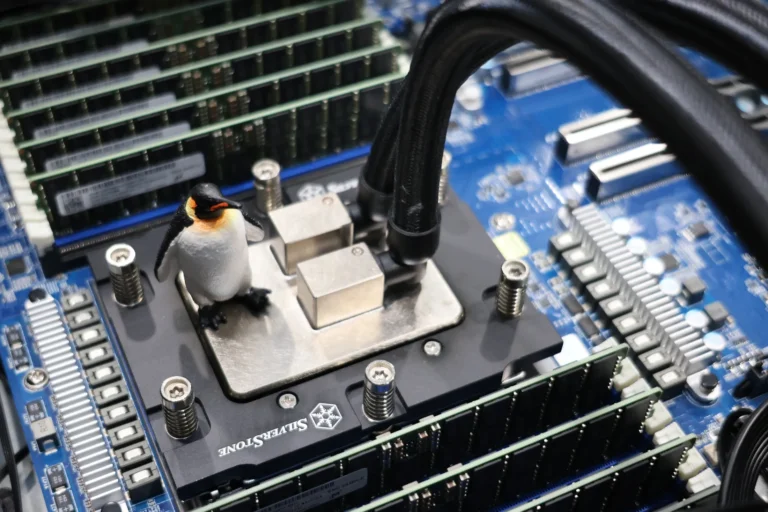

Initial benchmarking of the Linux 7.0 kernel on the Intel Core Ultra X7 "Panther Lake" platform revealed performance regressions. Testing on an AMD EPYC Turin server, by contrast, showed no regressions and turned up significant performance gains for PostgreSQL database operations.

The benchmarks compared Linux 6.19 against Linux 7.0 Git on a single-socket AMD EPYC 9755 in a Gigabyte MZ33-AR1 server. Moving to Linux 7.0 brought modest improvements for CockroachDB and notable gains for PostgreSQL 18.1 in both read and write operations. Performance of in-memory databases such as Memcached and Pogocache was unchanged, while the Nginx HTTPS web server and the Open Image Denoise library saw slight improvements.

The increased context switching times seen on Panther Lake did not reproduce on EPYC Turin. Both platforms did show improved kernel message passing, socket activity, and pthread performance. Benchmarking will continue as the Linux 7.0 merge window nears its end.