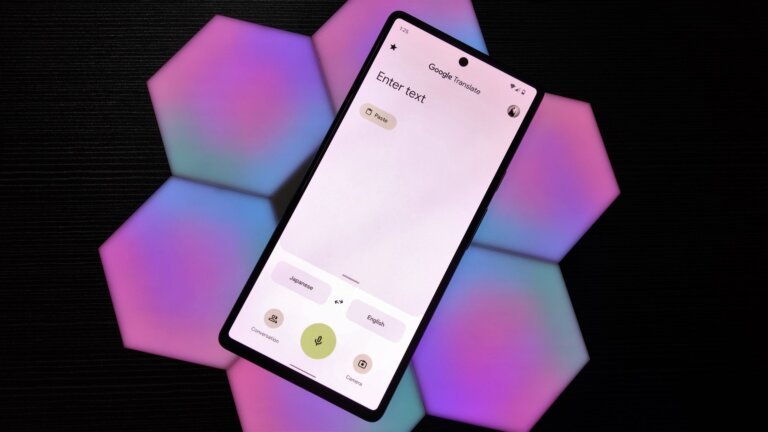

Google Translate's new AI-powered Advanced mode, which runs on a Gemini-based large language model, can end up holding a conversation instead of translating. Users have found that when the text to be translated is phrased as a question, the mode sometimes answers it rather than returning a straightforward translation. The cause is a form of "prompt injection." The text to translate reaches the model alongside the request to translate it, so the model can struggle to tell which parts are content to translate and which are instructions to follow. The older Classic mode remains a reliable option for consistent translations without these unexpected interactions.