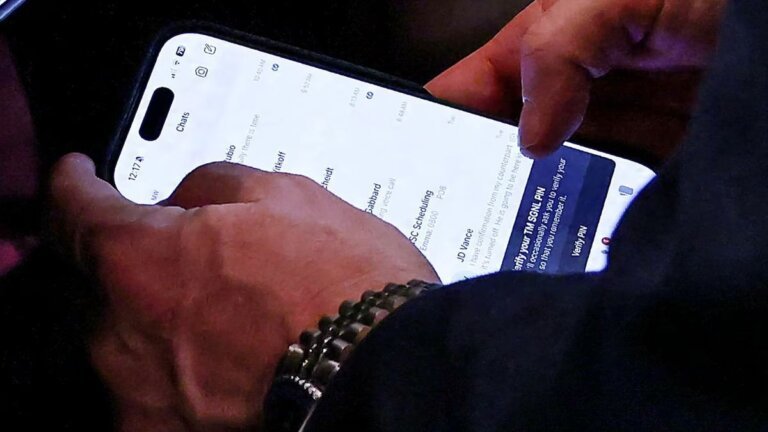

Meta has retired its standalone Messenger website and now redirects users to the Messages interface built into Facebook. Users can no longer send or receive messages through the Messenger site and must use facebook.com/messages instead. The Messenger mobile app is unaffected.

People who used Messenger without a Facebook account lose desktop access entirely and will have to use the mobile app. Users with end-to-end encrypted chats will need their secure storage PIN to restore their messages. Meta encourages users to export their data before the transition. The consolidation aims to streamline Messenger's functionality and reduce support costs.

The closure was first reported by reverse engineer Alessandro Paluzzi and later covered by TechCrunch, and Meta has been notifying users of the change.