Your text messages and calls can be intercepted by hackers, corporations, and government agencies, putting your private information at risk. Corporations may mine your messages for targeted advertising or sell your data, while hackers may exploit that data for identity theft or fraud. Governments may monitor communications under the guise of national security. Many default messaging options either lack strong encryption or expose sensitive metadata, such as who you contact and when, so a secure messaging app is essential for protecting your communications.

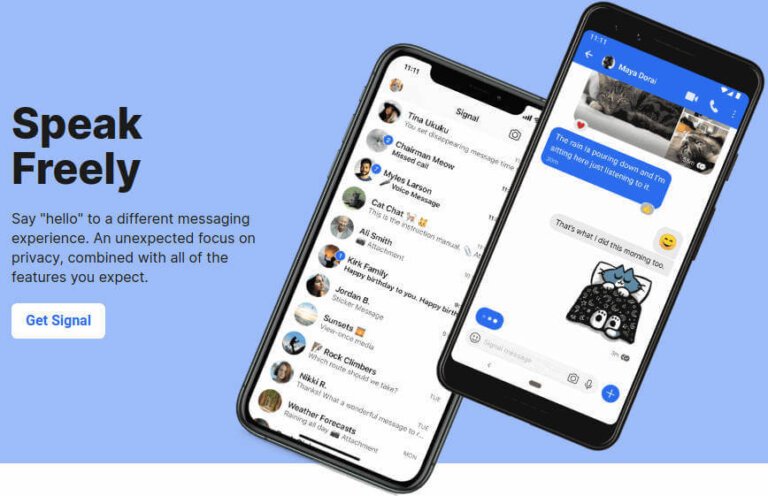

Signal is widely regarded as the most secure messaging app: it offers end-to-end encryption, is open source, and is free of charge, though it requires a phone number to register. Threema can be used anonymously, without a phone number or email address, and collects minimal data, but it has no free version. Telegram, while popular, raises security concerns because its chats are not end-to-end encrypted by default; only its opt-in Secret Chats are. WhatsApp and Keybase are discouraged because of privacy concerns tied to their ownership: WhatsApp belongs to Meta (formerly Facebook), and Keybase was acquired by Zoom. When choosing a secure messaging app, look for end-to-end encryption, independent third-party security testing, open-source code, self-destructing messages, and minimal collection of user data.