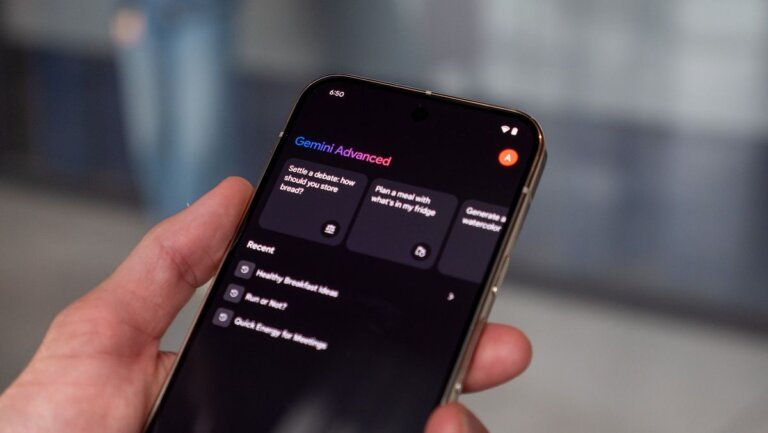

Google has added a feature to the Gemini app that turns still images into short videos using its Veo 3 model. Google AI Pro and Ultra subscribers can upload a photo and write a text description of the video they want, including the soundscape it should have. Google Cloud previously launched a similar feature, built on Veo 2, for Honor's 400 series; that version required a subscription once a trial period ended. Google showcased Veo 3 at I/O 2025, highlighting its audio generation and lifelike video output, and YouTube is considering integrating it into Shorts. Google has also said it has put safety measures in place for these AI tools.