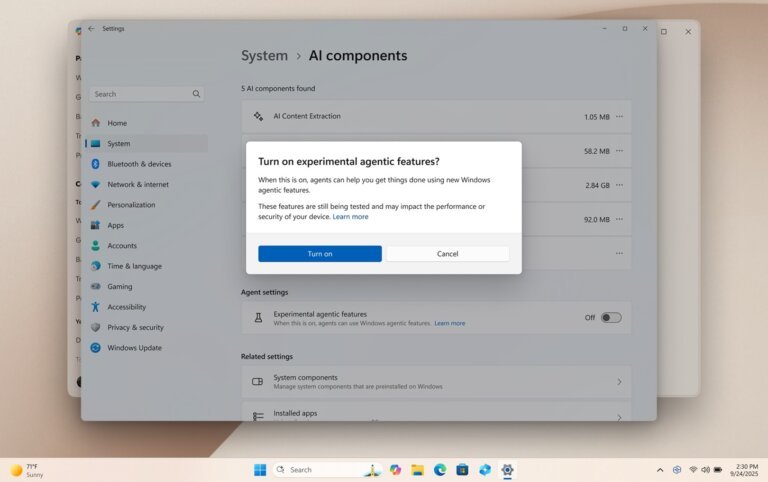

Microsoft is introducing the "Copilot+ PC," a new category of Windows 11 PCs with specific minimum hardware requirements: at least 16GB of RAM, a 256GB SSD, and a Neural Processing Unit (NPU) capable of 40 or more TOPS (trillion operations per second). The NPU is the key component, because it accelerates AI and machine learning workloads so they can run on the device itself. Not every Windows 11 device qualifies as a Copilot+ PC; in particular, older models whose NPUs fall below the 40 TOPS threshold are excluded. Microsoft claims that Copilot+ PCs boost productivity and creativity through features such as a dedicated Copilot key, Recall (which lets users search back through their past screen activity), Live Captions in over 40 languages, image generation in Paint, and editing tools in Microsoft Photos. Despite this focus on AI, some manufacturers are shifting their attention to other selling points, such as build quality and gaming performance.