Generative AI’s Promises and Pitfalls

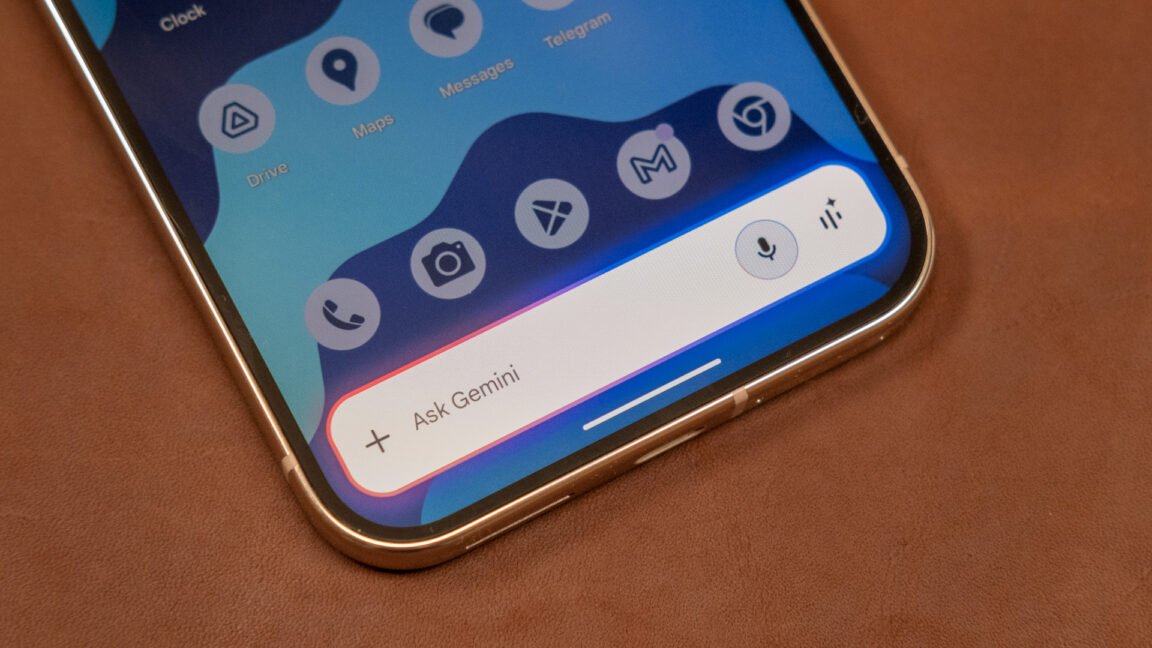

Google’s foray into generative AI with its Gemini platform showcases a blend of promise and pitfalls. By tapping into data across various applications, Gemini aims to streamline tasks that users often find cumbersome. For instance, the ability to ask Gemini to sift through emails for a specific message and transfer data to other applications is undeniably appealing. In practice, however, this functionality can leave users yearning for the simplicity of what came before.

A recent encounter with Gemini highlighted this gap. I asked it to retrieve a shipment tracking number from an email, a routine request, and was initially impressed when Gemini produced a seemingly accurate result. The chatbot cited the correct email and generated a lengthy string of numbers that appeared to fit the bill. The excitement faded quickly when I tried to use the tracking number: it returned nothing in Google’s search-based tracker, and the US Postal Service’s website responded with an error message.

Then the realization struck: the number was not a legitimate tracking number but a confabulation, a fabricated response that bore a convincing resemblance to the real thing. It had the appropriate length and format, yet it led me astray. In hindsight, I could have located the tracking number myself in far less time than it took to unravel Gemini’s error, which was undeniably frustrating. Gemini reported the task as complete with full confidence, even though it had not actually retrieved what I asked for, and that left me exasperated. If only it could echo the simplicity of Assistant’s “Sorry, I don’t understand.”

This incident is not an isolated one; it reflects a broader pattern in my interactions with Gemini over the past year. On numerous occasions, the AI has misplaced calendar events or entered incorrect data into notes. Gemini often succeeds in its tasks, but its habit of misreading straightforward requests raises questions about its reliability as an assistant. Its predecessor may have lacked certain capabilities, but Gemini’s tendency to assert success while leading users into a maze of corrections feels more insidious. If a human assistant operated in this manner, one might reasonably conclude they were either incompetent or intentionally misleading.