Modern application development has evolved to prioritize not only an exceptional developer experience (DX) but also a harmonious balance between rapid initiation and a streamlined path to production. This philosophy is at the heart of the Amplify AI kit, which abstracts common AI tasks—such as engaging with large language models (LLMs) and generating content from prompts—enabling developers to expedite their time to market while minimizing boilerplate code.

In this exploration, we will extend beyond the initial setup and utilize a serverless Postgres database from Neon to retrieve product data, moving away from the default database model provided by Amplify. This approach simplifies the code necessary for conversing with an LLM through retrieval-augmented generation (RAG).

Application Overview

One of the most compelling aspects for application consumers is the integration of AI to enhance existing products rather than compete with them. A practical demonstration of this concept is the creation of a chatbot that interacts with customers. In a real-world context, this chatbot would not inhibit a customer’s ability to shop independently but would guide them toward purchases using natural language.

From an architectural perspective, when a user accesses the application, they engage with our LLM-powered bot. While these models are trained on general data, our objective is to tailor them to our product information. Given that product details can change frequently, it is crucial to source this information from our database. The ability to choose between general knowledge and specific data access is a powerful feature, facilitated by a tool (also known as “function-calling”).

It’s essential to understand that when an LLM opts to utilize a tool, it is not directly accessing your data. Instead, it suggests the most suitable tool based on the user’s prompt, leaving the application to determine which function to invoke. The response from that function is then relayed back to the LLM and formatted into natural language for the end-user.

Orchestrating this pattern manually can be both tedious and error-prone. Fortunately, this heavy lifting is precisely what the Amplify AI kit handles by default.

For our project, we will leverage Amazon Bedrock with the Claude 3.5 Haiku LLM, which includes tool support. Amplify will enable us to specify a tool that corresponds to our Neon Postgres database containing product information.

Creating Serverless Postgres Databases with Neon

The capability to connect to existing data sources empowers developers to harness Amplify’s schema introspection beyond Amazon DynamoDB, the default database, allowing for automated CRUD operations. Setting up a Neon database is a straightforward process. After creating an account, users are prompted to initiate a project.

Neon supports branch-based projects akin to git, allowing for copies of the main branch. For instance, one might create a branch named dev/mtliendo. While this is recommended, it is not mandatory. Regardless, it is crucial to copy the connection string for that branch, as it will be essential in subsequent steps.

With our default database established, we still need to define the table schema. For those unfamiliar with SQL, Neon offers a solution through its “Generate with AI” feature. By interacting with their LLM, users can receive generated responses for their requests. In the SQL editor, one might input the following:

Create a table schema called “Products”. Each product has a random id, an “updated at” field that is a date-time, a “created at” field that is a date-time, a “price in cents” field that is a number, a “name”, and a “description”.

Upon executing this prompt, the following response is generated:

CREATE TABLE Products (

id UUID PRIMARY KEY DEFAULT gen_random_uuid(),

updated_at TIMESTAMP WITH TIME ZONE DEFAULT CURRENT_TIMESTAMP,

created_at TIMESTAMP WITH TIME ZONE DEFAULT CURRENT_TIMESTAMP,

price_in_cents INTEGER NOT NULL,

name VARCHAR(255) NOT NULL,

description TEXT

);

Users can modify the code as needed and execute the command once they are comfortable with the syntax. To confirm that everything is set up correctly, clicking on the “Tables” link in the sidebar allows verification of the schema and populating the database.

For this project, I’ve added several items to the database. Additionally, ensure you copy the connection string to our database, as it will be required in the next section. The connection string can be found in the “Overview” section of the sidebar.

Enhancing Amplify Gen 2 with AI Kit

AWS Amplify provides a seamless way to connect frontend applications to a backend powered by AWS. Assuming an application built with a JavaScript framework like NextJS is already in place, developers can scaffold Amplify files by executing the following command in the project root:

npm create amplify

This command installs Amplify’s dependencies and creates an amplify directory. Before modifying the code within that directory, additional dependencies needed for the Amplify AI kit should be installed:

npm i @aws-amplify/ui-react @aws-amplify/ui-react-ai

These UI components will be utilized shortly. Prior to that, we will allow Amplify to introspect our database with the products table for backend use. The first step involves storing our connection string as a secret in the Parameter Store of AWS Systems Manager. Fortunately, Amplify simplifies this process:

npx ampx sandbox secret set SQL_CONNECTION_STRING

This command sets a secret value for SQL_CONNECTION_STRING and prompts for the value. Here, paste the connection string copied from Neon and press enter.

This section assumes AWS Amplify is already configured on your local machine. For configuration instructions, please refer to the documentation.

Once the secret is stored, we can instruct Amplify to introspect our database and generate CRUD operations for use in our frontend application:

npx ampx generate schema-from-database --connection-uri-secret SQL_CONNECTION_STRING --out amplify/data/schema.sql.ts

Executing this command will create a schema.sql.ts file in the amplify/data folder. It is important not to modify this file, as it is managed by Amplify. After running the command, the file should resemble the following:

Check the documentation to understand what Amplify does behind the scenes to facilitate this process.

import { type ClientSchema, defineData, a } from '@aws-amplify/backend'

import { schema as generatedSqlSchema } from './schema.sql'

const sqlSchema = generatedSqlSchema.setAuthorization((models) => [

models.items.authorization((allow) => [allow.authenticated().to(['read'])]),

])

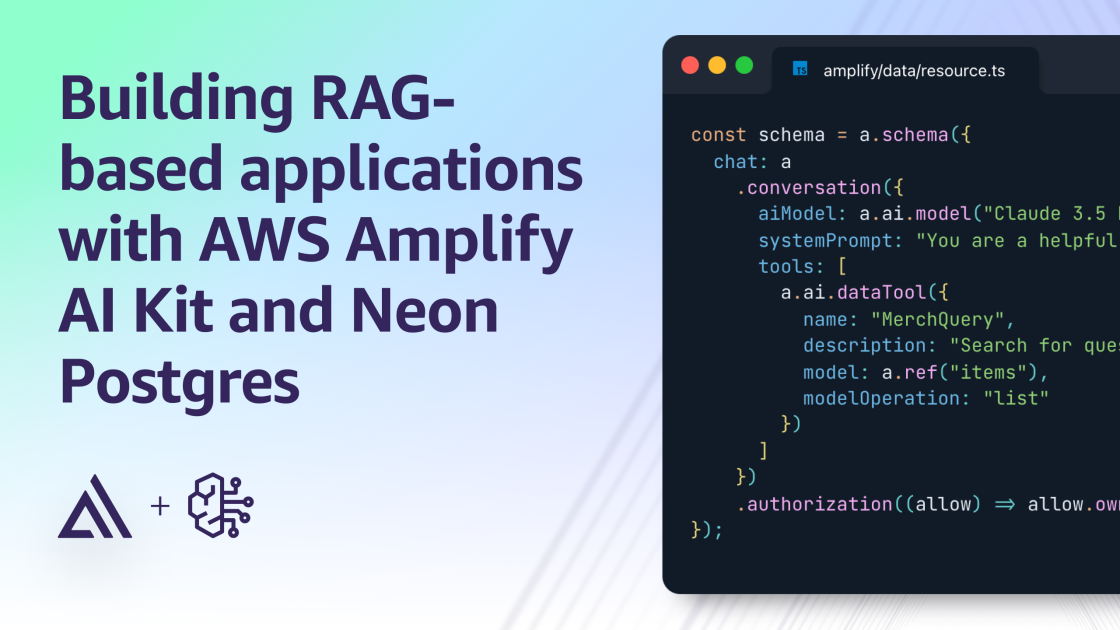

const schema = a.schema({

chat: a

.conversation({

aiModel: a.ai.model('Claude 3.5 Haiku'),

systemPrompt:

'You are a helpful assistant, that focuses on selling and upselling merchandise',

tools: [

a.ai.dataTool({

name: 'MerchQuery',

description:

'Search for questions regarding merchandise, shopping apparel, and item prices.',

model: a.ref('items'), //! This refers to the name of our table

modelOperation: 'list',

}),

],

})

.authorization((allow) => allow.owner()),

})

const combinedSchema = a.combine([sqlSchema, schema])

export type Schema = ClientSchema

export const data = defineData({ schema: combinedSchema })

With our Neon database integrated into the application, we can import it into the amplify/data/resource.ts file and merge it with the conversational capabilities of the Amplify AI kit. Let’s break down the functionality within this file:

- Line 4: We assign authorization rules to our

productstable from Neon, allowing only signed-in users to performreadoperations. - Line 8: We create an identifier named

chat, which serves as a conversation bot requiring, at a minimum, the LLM name and a prompt outlining its behavior. Note that model names are typed and available in Intellisense. - Line 13: We enhance our bot by adding a tool. The name and description are customizable, while the

modelmust refer to our Neon database’s name. Currently, the only supportedmodelOperationislist. - Line 22: This line indicates that a DynamoDB table maintains a record of signed-in users’ conversation history.

By integrating these components, we establish a fully-managed solution for securely conversing with an LLM that is informed about the items in our database. To test our solution, we first deploy our AWS backend using the following command:

npx ampx sandbox

After deployment, we can import our Amplify configuration and set up our client-side application to utilize the config, UI components, and hooks provided by Amplify:

import { generateClient } from 'aws-amplify/api'

import { Schema } from '@/amplify/data/resource'

import { useEffect } from 'react'

import { Amplify } from 'aws-amplify'

import awsconfig from '@/amplify_outputs.json'

import { withAuthenticator } from '@aws-amplify/ui-react'

import { AIConversation, createAIHooks } from '@aws-amplify/ui-react-ai'

import '@aws-amplify/ui-react/styles.css'

Amplify.configure(awsconfig)

const client = generateClient()

const { useAIConversation } = createAIHooks(client)

Once configured, the entire frontend—including chat, conversational awareness, streaming, loading states, and authentication—can be established in approximately 20 lines of code:

function Home() {

const [

{

data: { messages },

isLoading,

},

handleSendMessage,

] = useAIConversation('chat')

return (

)

}

export default withAuthenticator(Home)

The new AIConversation component from the Amplify AI kit offers a comprehensive chat UI while still allowing various props for further customization according to specific needs.