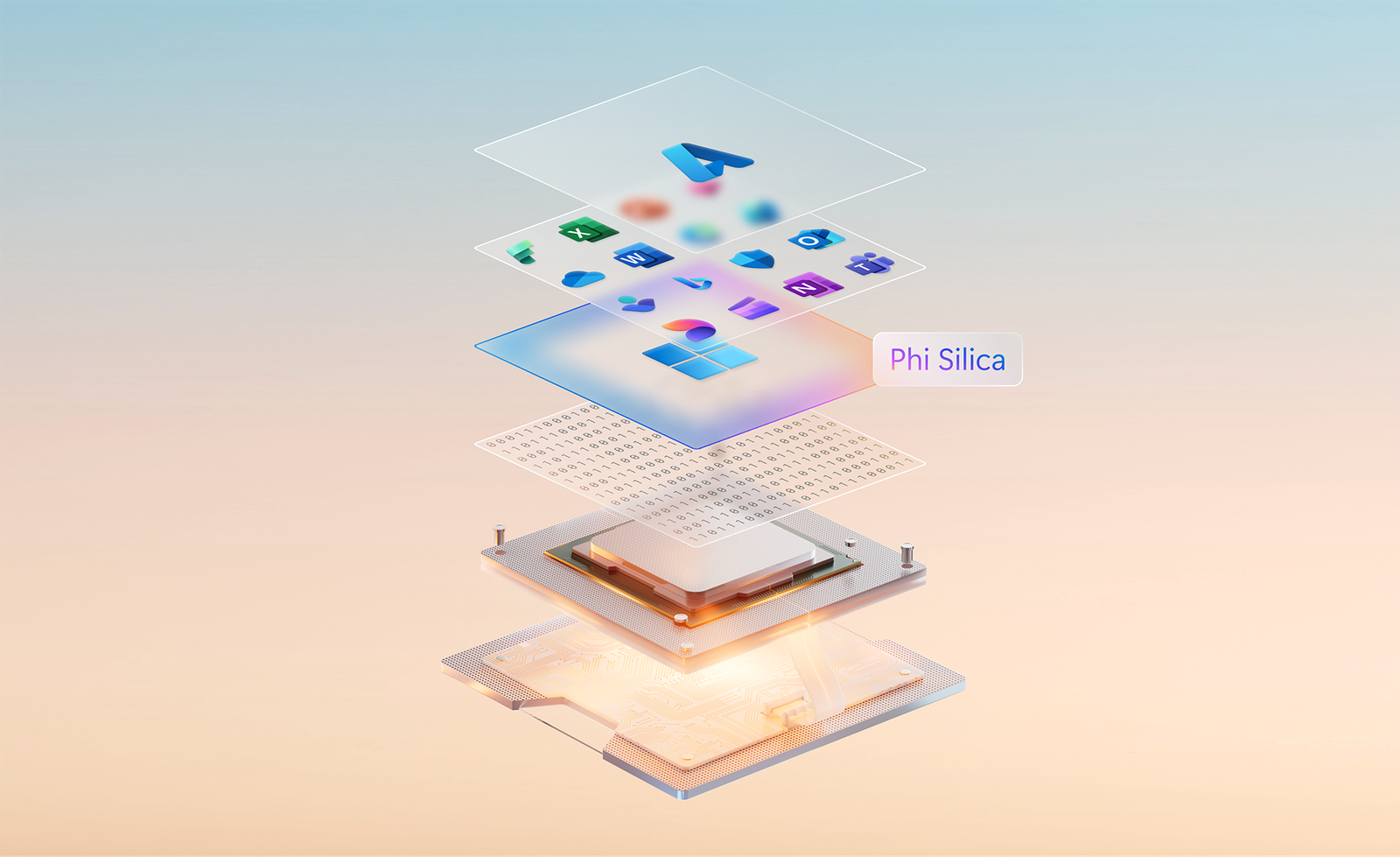

This post is the first in a series on advances in AI technology on Windows. The Applied Sciences team took a multidisciplinary approach to improving power efficiency, inference speed, and memory efficiency in Phi Silica, a state-of-the-art small language model (SLM). Phi Silica is integrated into Windows 11 Copilot+ PCs, starting with the Snapdragon X Series, and powers several features in the latest Copilot+ PC experiences, including Click to Do (Preview) and on-device rewrite and summarize capabilities in Word and Outlook. It is also available to developers as a turnkey, pre-optimized SLM.

Background

In May, Microsoft unveiled Copilot+ PCs, equipped with a Neural Processing Unit (NPU) capable of more than 40 trillion operations per second (TOPS). Alongside this announcement, Phi Silica was introduced as the new on-device SLM for Snapdragon X Series NPUs. Phi Silica is a sibling of the Phi models and is built to take advantage of the NPU on Copilot+ PCs. At the Ignite conference in November, Microsoft announced that developers would have access to the Phi Silica API starting in January 2025. Because Phi Silica is pre-tuned and included by default, developers can integrate language intelligence into their applications without extensive model optimization or customization.

These NPU devices offer all-day battery life and can sustain AI workloads over extended periods with minimal impact on system performance and resources. Combined with the cloud, Copilot+ PCs reach new levels of performance: up to 20 times more powerful and 100 times more efficient at running AI workloads than traditional setups, with a smaller footprint than GPUs in terms of TOPS per watt per dollar. The NPUs can sustain AI workloads that exhibit emergent behavior, letting users issue unlimited low-latency queries without additional subscription fees. This is a significant shift in computing capabilities: powerful reasoning agents can run as part of background operating system services, enabling innovation across a wide range of applications and services.

Original Floating-Point Model

Phi Silica is built on a Cyber-EO-compliant derivative of Phi-3.5-mini, developed specifically for Windows 11. It has a 4k context length, supports multiple languages, including Tier 1 languages such as English, Chinese (Simplified), French, German, Italian, Japanese, Portuguese, and Spanish, and incorporates enhancements essential for in-product experiences.

A language model like Phi comprises several components:

- The tokenizer: This component breaks down input text into smaller units and maps them to an index based on a predefined vocabulary, establishing a connection between human language and model language.

- The detokenizer: This performs the reverse operation of the tokenizer.

- The embedding model: This maps each discrete input token ID to a continuous, higher-dimensional vector that captures the token's semantic information, giving the rest of the model a numerical representation of the text's meaning.

- The transformer block: This transforms incoming vectors into output vectors that indicate the next token in the sequence.

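- The language model head: This projects the final output vectors onto the vocabulary, producing a probability for each candidate next token, from which the most likely token can be selected.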
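A toy, word-level sketch in Python makes the division of labor between these components concrete. Everything in it is hypothetical and chosen for illustration: the six-word vocabulary, the random 8-dimensional embedding table, reusing that table as the language model head (weight tying), and an identity function standing in for the transformer block. Production tokenizers split text into subword units, and a real transformer is a deep stack of attention and feed-forward layers.

```python
import numpy as np

# Hypothetical toy vocabulary; real tokenizers use tens of thousands of subword units.
VOCAB = ["<unk>", "the", "cat", "sat", "on", "mat"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

def tokenize(text):
    """Tokenizer: split text into units and map each one to its vocabulary index."""
    return [TOKEN_TO_ID.get(word, 0) for word in text.lower().split()]

def detokenize(ids):
    """Detokenizer: the reverse mapping, from indices back to text."""
    return " ".join(VOCAB[i] for i in ids)

rng = np.random.default_rng(0)
EMBEDDINGS = rng.standard_normal((len(VOCAB), 8))  # one 8-dim vector per token ID

def embed(ids):
    """Embedding: look up a continuous vector for each discrete token ID."""
    return EMBEDDINGS[ids]

def transformer_block(x):
    """Placeholder for the transformer stack (identity here, for illustration only)."""
    return x

def lm_head(h):
    """LM head: score every vocabulary entry against the last hidden state, then softmax."""
    logits = EMBEDDINGS @ h[-1]  # tied weights: an assumption, not Phi Silica's design
    probs = np.exp(logits - logits.max())
    return probs / probs.sum()

ids = tokenize("The cat sat on the mat")
probs = lm_head(transformer_block(embed(ids)))
next_id = int(np.argmax(probs))  # greedy choice of the next token
```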

Generating a response involves two distinct phases of the transformer block:

- Context processing: The model processes all input tokens in parallel to compute the key-value (KV) cache and the hidden states. This phase is highly parallel and compute-intensive.

- Token iteration: Tokens are generated one by one, with each new token becoming part of the extended context for predicting the next one. Generation concludes when an end token is produced or a user-defined condition is met.
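The two phases can be illustrated with a minimal generation loop. This is a sketch under simplifying assumptions: `step` is a hypothetical callable that takes new token IDs plus the cache and returns the next token and the updated cache, and the "cache" is just a list of seen tokens rather than per-layer key and value tensors.

```python
from typing import Callable, List, Tuple

# Stand-in type: a real KV cache holds key/value tensors for every layer and head.
Cache = List[int]
StepFn = Callable[[List[int], Cache], Tuple[int, Cache]]

def generate(step: StepFn, prompt: List[int], eos_id: int, max_new_tokens: int) -> List[int]:
    # Phase 1, context processing: the whole prompt goes through in one parallel pass,
    # filling the cache and yielding the first new token.
    next_token, cache = step(prompt, [])
    output: List[int] = []
    # Phase 2, token iteration: one token per step. Only the newest token is fed in;
    # everything before it is already represented in the cache.
    while True:
        output.append(next_token)
        if next_token == eos_id or len(output) >= max_new_tokens:
            break  # stop on the end token (kept in the output) or the user-defined limit
        next_token, cache = step([next_token], cache)
    return output

def toy_step(new_tokens: List[int], cache: Cache) -> Tuple[int, Cache]:
    """Hypothetical model: 'caches' the tokens it sees and predicts (last token + 1) mod 10."""
    cache = cache + new_tokens
    return (cache[-1] + 1) % 10, cache

print(generate(toy_step, [3, 4], eos_id=7, max_new_tokens=10))  # [5, 6, 7]
```

The first `step` call receives the whole prompt, while every later call receives a single token, which mirrors the split between the parallel context-processing pass and the sequential token iteration.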

Running these phases for SLMs like Phi, which contain billions of parameters, can strain device resources. Context processing demands substantial compute, which on a CPU competes with running applications, while token iteration needs significant memory to store and repeatedly read the KV cache. Because every new token requires reading the model weights and the growing KV cache, efficient memory access is crucial for keeping token generation fast under tight memory constraints.
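A back-of-the-envelope calculation shows why the KV cache weighs on memory during token iteration. The model shape below is an assumption (32 layers, 32 key-value heads, head dimension 96, chosen to resemble the publicly released Phi-3.5-mini); this post does not give Phi Silica's own configuration or KV cache precision, so the numbers are illustrative only.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_elem):
    # Factor 2: one key tensor and one value tensor per layer.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem

# Assumed, Phi-3.5-mini-like shape; not a statement of Phi Silica's configuration.
LAYERS, KV_HEADS, HEAD_DIM = 32, 32, 96
MIB = 2 ** 20

fp16_2k = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, 2048, 2)    # 16-bit keys/values
fp16_4k = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, 4096, 2)
int4_2k = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, 2048, 0.5)  # 4-bit keys/values

print(f"fp16, 2k tokens: {fp16_2k / MIB:.0f} MiB")   # fp16, 2k tokens: 768 MiB
print(f"fp16, 4k tokens: {fp16_4k / MIB:.0f} MiB")   # fp16, 4k tokens: 1536 MiB
print(f"4-bit, 2k tokens: {int4_2k / MIB:.0f} MiB")  # 4-bit, 2k tokens: 192 MiB
```

Under these assumptions every token adds 384 KiB of fp16 keys and values, and all of it is read again at each subsequent decoding step, which is why token iteration tends to be limited by memory capacity and bandwidth rather than by arithmetic.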

NPUs in Copilot+ PCs are designed for power efficiency, executing several TOPS within a single-digit watt range. On devices with Snapdragon X Elite processors, Phi Silica's context processing consumes only 4.8 mWh of energy on the NPU, while the token iterator shows a 56% improvement in power consumption compared to running on the CPU. As a result, Phi Silica can run without overburdening the CPU and GPU, keeping memory and power consumption low and leaving primary applications largely unaffected.

Creating Phi Silica

Given the size of the original floating-point model, the memory limits of the target hardware, and the desired targets for speed, memory usage, and power efficiency, Phi Silica was designed with the following characteristics:

- 4-bit weight quantization for high speed and a low memory footprint during inference.

- Low idle memory consumption to support pinned memory and eliminate initialization costs.

- Rapid time to first token for shorter prompts to enhance interactivity.

- A context length of 2k or greater for real-world usability.

- NPU-based operation for sustained power efficiency.

- High accuracy across multiple languages.

- Small model disk size for efficient distribution at Windows scale.
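The goals of 4-bit weights and a small disk size come down to simple arithmetic on the weight count. The sketch below assumes 3.8 billion parameters (the published size of Phi-3.5-mini; Phi Silica's exact count is not stated here), fp16 scales, and a hypothetical block size of 32, and it ignores zero points, any 8-bit layers, and file-format overhead.

```python
def weight_bytes(num_params, bits, block_size=None, scale_bytes=2):
    """Approximate storage for quantized weights.

    With block-wise quantization, every `block_size` weights share one scale
    (assumed fp16, 2 bytes). Zero points and mixed-precision layers are ignored.
    """
    total = num_params * bits / 8
    if block_size is not None:
        total += num_params / block_size * scale_bytes
    return total

PARAMS = 3.8e9  # assumption: Phi-3.5-mini's published size, not necessarily Phi Silica's
GB = 1e9

fp16 = weight_bytes(PARAMS, 16)                        # unquantized baseline
int4 = weight_bytes(PARAMS, 4)                         # raw 4-bit weights
int4_blocks = weight_bytes(PARAMS, 4, block_size=32)   # 4-bit weights plus block scales
bits_per_weight = int4_blocks * 8 / PARAMS             # 4.5
```

The scale overhead is why block size is a trade-off: smaller blocks track the weights more closely but add more bits per weight.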

With these goals in mind, Phi Silica was developed with optimizations at several levels of the stack, including post-training quantization, efficient resource use in the inference software, and targeted silicon-specific optimizations. The resulting model delivers:

- Time to first token: 230 ms for short prompts.

- Throughput rate: Up to 20 tokens/s.

- Context length: 2k (with support for 4k coming soon).

- Sustained NPU-based context processing and token iteration.
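To translate these figures into user-facing latency, a rough estimate treats the first token as arriving after the time to first token and the remaining tokens as arriving at a steady throughput. This assumes a short prompt and that the best-case rate of 20 tokens/s is sustained, so it is an optimistic approximation rather than a measured result.

```python
def estimated_response_time(num_tokens, ttft_s=0.230, tokens_per_s=20.0):
    """Rough wall-clock estimate: first token after TTFT, the rest at steady throughput."""
    if num_tokens < 1:
        return 0.0
    return ttft_s + (num_tokens - 1) / tokens_per_s

t = estimated_response_time(100)  # 0.23 + 99 / 20, about 5.18 seconds
```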

Post-Training Quantization

To achieve true low-precision inference by quantizing both weights and activations, Microsoft collaborated with academic researchers to develop QuaRot: Outlier-Free 4-Bit Inference in Rotated LLMs. QuaRot acts as a pre-quantization modifier that enables end-to-end quantization of language models down to 4 bits. It rotates the model so that outliers are removed from the hidden state without changing the model's output, which makes high-quality quantization possible at lower bit widths.

QuaRot builds on two fundamental concepts:

- Incoherence processing: Earlier weight-only quantization methods found that weight matrices with a few large outlier entries are hard to quantize, and addressed this by multiplying them by random orthogonal matrices so that their magnitudes are spread evenly (that is, made incoherent). This eases quantization, at some additional computational cost.

- Computational invariance: QuaRot extends the idea of computational invariance, in which a weight matrix is multiplied by an orthogonal matrix and its input by the inverse, so the layer's output is unchanged. By using random Hadamard transforms, which are cheap to apply, QuaRot keeps the computational overhead low while also removing outliers from the activations, making them easier to quantize.

The result is an equivalent network in a rotated space, in which activations, weights, and the KV cache can all be quantized to 4 bits with minimal accuracy loss.
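Both ideas can be demonstrated on a single linear layer with synthetic data. This NumPy sketch builds a randomized Hadamard matrix `Q` (Sylvester construction times random signs), checks that `(W @ Q) @ (Q.T @ x)` equals `W @ x`, and compares the 4-bit quantization error of an activation vector with one outlier channel before and after rotation. It illustrates the principle only: QuaRot applies such rotations throughout the network, fuses most of them into the weights, and quantizes weights with GPTQ, none of which is reproduced here.

```python
import numpy as np

def hadamard(n):
    """Orthonormal Hadamard matrix via Sylvester's construction (n must be a power of 2)."""
    assert n > 0 and n & (n - 1) == 0, "n must be a power of two"
    h = np.array([[1.0]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h / np.sqrt(n)  # scaled so that h @ h.T == I

def random_hadamard(n, rng):
    """Randomized Hadamard rotation H @ diag(s) with random signs s (still orthonormal)."""
    signs = rng.choice([-1.0, 1.0], size=n)
    return hadamard(n) * signs  # multiplies column j by signs[j]

def quantize_sym(v, bits=4):
    """Symmetric per-tensor round-to-nearest quantization, returned dequantized."""
    qmax = 2 ** (bits - 1) - 1
    scale = np.abs(v).max() / qmax
    return np.clip(np.round(v / scale), -qmax - 1, qmax) * scale

def crest_factor(v):
    """Peak-to-RMS ratio: large when a few entries (outliers) dominate."""
    return np.abs(v).max() / np.sqrt(np.mean(v ** 2))

rng = np.random.default_rng(0)
d = 64
x = rng.standard_normal(d)
x[3] = 50.0                       # one outlier channel in the hidden state
W = rng.standard_normal((16, d))  # a linear layer consuming x

Q = random_hadamard(d, rng)
x_rot = Q.T @ x  # rotate the activation ...
W_rot = W @ Q    # ... and fold the inverse rotation into the weights

# Computational invariance: (W Q)(Q^T x) = W x because Q Q^T = I.
y, y_rot = W @ x, W_rot @ x_rot

# 4-bit error measured in the original space (Q is orthogonal, so norms are preserved).
err_plain = np.linalg.norm(x - quantize_sym(x))
err_rot = np.linalg.norm(x - Q @ quantize_sym(x_rot))
```

The rotation preserves the vector's norm but spreads the outlier across all 64 channels, so the per-tensor scale shrinks and the small values are no longer rounded to zero.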

Realizing Gains from a 4-Bit Model

The 4-bit weight quantization reduced memory usage, but adapting QuaRot for 4-bit-weight inference on an NPU required several adjustments, because the NPU software stack supports only specific quantization schemes. The final 4-bit Phi Silica model includes:

- Rotated network: Conversion of the LayerNorm transformer network into an RMS-Norm transformer network using fused Hadamard rotations.

- Embedding layer: A fused one-time ingoing QuaRot rotation.

- Activations: Asymmetric per-tensor round-to-nearest quantization to unsigned 16-bit integers, using ONNX quantization.

- Weights: Symmetric per-channel quantization to 4-bit integers, computed with QuaRot combined with GPTQ and integrated into the rotated network.

- Linear layers: Conversion of all linear layers into 1×1 convolutional layers, which reduces latency on the current NPU stack.

- Selective mixed precision: Identifying the quantized weights with the largest reconstruction errors and applying 8-bit quantization only to those, keeping the model compact.

- Language model head: A fused one-time outgoing QuaRot de-rotation, with 4-bit block-wise quantization to keep memory usage low.
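Several of these recipes can be sketched in NumPy: symmetric per-channel int4 weight quantization, asymmetric per-tensor uint16 activation quantization (following the ONNX `QuantizeLinear` formula, round(x / scale) + zero_point, saturated to the integer range), and a hypothetical mixed-precision policy that promotes the layers with the largest 4-bit weight reconstruction error to 8 bits. GPTQ's error-compensating updates, the rotations, and block-wise quantization of the language model head are omitted, and the layer names are made up.

```python
import numpy as np

def quantize_weights_per_channel(w, bits=4):
    """Symmetric per-output-channel quantization: one scale per row, zero point fixed at 0."""
    qmax = 2 ** (bits - 1) - 1  # 7 for int4, 127 for int8
    scale = np.abs(w).max(axis=1, keepdims=True) / qmax
    scale[scale == 0] = 1.0     # guard all-zero rows
    # int4 values are held in int8 here for simplicity; real storage packs two per byte.
    q = np.clip(np.round(w / scale), -qmax - 1, qmax).astype(np.int8)
    return q, scale

def dequantize_weights(q, scale):
    return q.astype(np.float32) * scale

UINT16_MAX = 2 ** 16 - 1

def quantize_activations_per_tensor(x):
    """Asymmetric per-tensor round-to-nearest quantization to uint16 (one scale, one zero point)."""
    lo, hi = min(float(x.min()), 0.0), max(float(x.max()), 0.0)  # range must contain 0
    scale = (hi - lo) / UINT16_MAX if hi > lo else 1.0
    zero_point = int(round(-lo / scale))
    q = np.clip(np.round(x / scale) + zero_point, 0, UINT16_MAX).astype(np.uint16)
    return q, scale, zero_point

def dequantize_activations(q, scale, zero_point):
    return (q.astype(np.float64) - zero_point) * scale

def choose_bit_widths(layers, num_8bit):
    """Hypothetical policy: the `num_8bit` layers with the largest 4-bit
    reconstruction error (mean squared error) get 8-bit weights instead."""
    mse = {}
    for name, w in layers.items():
        q, s = quantize_weights_per_channel(w, bits=4)
        mse[name] = float(np.mean((w - dequantize_weights(q, s)) ** 2))
    worst = set(sorted(mse, key=mse.get, reverse=True)[:num_8bit])
    return {name: 8 if name in worst else 4 for name in layers}

rng = np.random.default_rng(0)
smooth = rng.standard_normal((8, 64))
spiky = rng.standard_normal((8, 64))
spiky[:, 0] = 100.0  # an outlier column inflates every row's scale
bit_widths = choose_bit_widths({"mlp.up": smooth, "attn.qkv": spiky}, num_8bit=1)

acts = rng.standard_normal(256) * 3.0
q_a, s_a, zp_a = quantize_activations_per_tensor(acts)
act_err = np.abs(dequantize_activations(q_a, s_a, zp_a) - acts).max()
```

Weights get one scale per output channel, while each activation tensor needs only a single scale and zero point. In the `spiky` matrix the outlier column forces a coarse scale on every row, so most of its other entries round to zero, which is the kind of layer the policy moves to 8 bits.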

Benchmark results indicate that QuaRot significantly improves quantization accuracy compared to traditional methods, particularly in low granularity settings.

Improving Memory Efficiency

To keep Phi Silica resident in memory for sustained inference, memory usage had to be optimized. Key techniques included:

- Weight sharing: The context processor and token iterator share quantized weights and activation quantization parameters, halving memory usage and accelerating model initialization.

- Memory-mapped embeddings: Implementing the embedding layer as a lookup table backed by a memory-mapped file reduced its dynamic memory footprint to effectively zero.

- Disabling arena allocator: Disabling the default arena allocator in ONNX Runtime improved overall memory efficiency by reducing excessive pre-allocation.
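The memory-mapped embedding idea can be sketched with NumPy's `np.memmap`. The vocabulary size, dimension, float16 dtype, and raw file layout are assumptions for illustration. The table lives in a read-only, file-backed mapping, and a lookup copies out only the rows for the requested token IDs.

```python
import os
import tempfile
import numpy as np

VOCAB_SIZE, DIM = 1000, 64  # toy sizes, not Phi Silica's

def save_embedding_table(path, table):
    """Write the table as raw row-major float16 bytes (no header)."""
    table.astype(np.float16).tofile(path)

def open_embedding_table(path):
    """Map the file read-only; the OS pages rows in on demand."""
    return np.memmap(path, dtype=np.float16, mode="r", shape=(VOCAB_SIZE, DIM))

def embed(table, token_ids):
    """Embedding lookup: copy out only the rows for the requested token IDs."""
    return np.array(table[np.asarray(token_ids)])

rng = np.random.default_rng(0)
reference = rng.standard_normal((VOCAB_SIZE, DIM)).astype(np.float16)
token_ids = [1, 42, 999]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "embeddings.bin")
    save_embedding_table(path, reference)
    table = open_embedding_table(path)
    vectors = embed(table, token_ids)
    del table  # release the mapping before the temporary file is deleted
```

Pages of a read-only file mapping are backed by the file itself, so the operating system can drop and reload them rather than treating them as private allocations. For the arena allocator item, ONNX Runtime's Python API exposes `SessionOptions.enable_cpu_mem_arena`, which can be set to `False`; the post does not say which API or setting Phi Silica uses.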

These changes, combined with the 4-bit quantized model, led to a ~60% reduction in memory usage.

Expanding the Context Length

Expanding the context length was crucial for real-world applications. Two key innovations made this possible:

- Sliding window: Processing prompts in smaller chunks reduces the effective sequence length while preserving the overall context length. This speeds up processing of shorter prompts without sacrificing speed on longer ones.

- Dynamic and shared KV cache: Splitting context processing across the NPU and CPU made it possible to use a dynamically sized, read-write KV cache, which significantly improves memory efficiency and runtime latency.
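One way to read the sliding-window idea is as chunked prefill: the prompt is fed through in windows of a fixed size, and each window attends to its own tokens plus the keys and values cached from earlier windows. The NumPy sketch below uses a single attention head with random weights, a hypothetical window of 4 tokens, and a simple growable cache, and checks that this gives the same result as processing the whole prompt at once. The NPU/CPU split and Phi Silica's shared, read-write cache layout are not modeled.

```python
import numpy as np

def causal_attention(q, k, v, q_start):
    """Single-head attention; query i sits at absolute position q_start + i."""
    scores = q @ k.T / np.sqrt(q.shape[1])
    q_pos = q_start + np.arange(q.shape[0])[:, None]
    k_pos = np.arange(k.shape[0])[None, :]
    scores = np.where(k_pos <= q_pos, scores, -np.inf)   # causal mask
    scores = scores - scores.max(axis=1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v

class GrowableKVCache:
    """Hypothetical dynamic cache: grows with the tokens actually seen, not a fixed maximum."""
    def __init__(self, dim):
        self.k = np.empty((0, dim))
        self.v = np.empty((0, dim))

    def append(self, k, v):
        self.k = np.concatenate([self.k, k])
        self.v = np.concatenate([self.v, v])

def prefill_full(x, wq, wk, wv):
    return causal_attention(x @ wq, x @ wk, x @ wv, q_start=0)

def prefill_chunked(x, wq, wk, wv, window):
    cache = GrowableKVCache(wk.shape[1])
    outputs = []
    for start in range(0, len(x), window):
        chunk = x[start:start + window]      # at most `window` tokens at a time
        cache.append(chunk @ wk, chunk @ wv)  # this chunk's keys/values join the cache
        outputs.append(causal_attention(chunk @ wq, cache.k, cache.v, q_start=start))
    return np.concatenate(outputs), cache

rng = np.random.default_rng(0)
seq_len, d = 10, 16
x = rng.standard_normal((seq_len, d))
wq, wk, wv = (rng.standard_normal((d, d)) for _ in range(3))

full = prefill_full(x, wq, wk, wv)
chunked, cache = prefill_chunked(x, wq, wk, wv, window=4)
```

Each attention call handles at most `window` query rows, which bounds the size of the work submitted at once. Concatenating on every append is the simplest way to let the cache grow with the actual context; a production cache would more likely preallocate in blocks to avoid repeated copies.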

Safety Alignment, Responsible AI, and Content Moderation

The floating-point model from which Phi Silica is derived has undergone safety alignment using a five-stage ‘break-fix’ methodology. The Phi Silica model, system design, and API are subject to Responsible AI impact assessments and deployment safety board reviews. The Phi Silica developer API also provides local content moderation, supporting safe and responsible AI deployment.