Google has unveiled a series of significant updates to its Gemini app, aimed at enhancing its functionality and user experience. The latest announcement, made on March 13, introduces the 2.0 Flash Thinking Experimental model, which is set to expand Gemini’s capabilities, allowing it to tackle complex queries with greater efficiency.

What you need to know

- Google’s announcement focuses on making the Gemini app more useful and better at solving complex queries.

- The post highlights the move to the 2.0 Flash Thinking Experimental model, which enhances Gemini and brings new features.

- The Gemini app picks up a new “personalization” experimental feature, which lets the AI scour your Google app/service history for “tailored” responses.

- Google is also letting users try out its latest Deep Research updates in Gemini, but only a limited number of times each month.

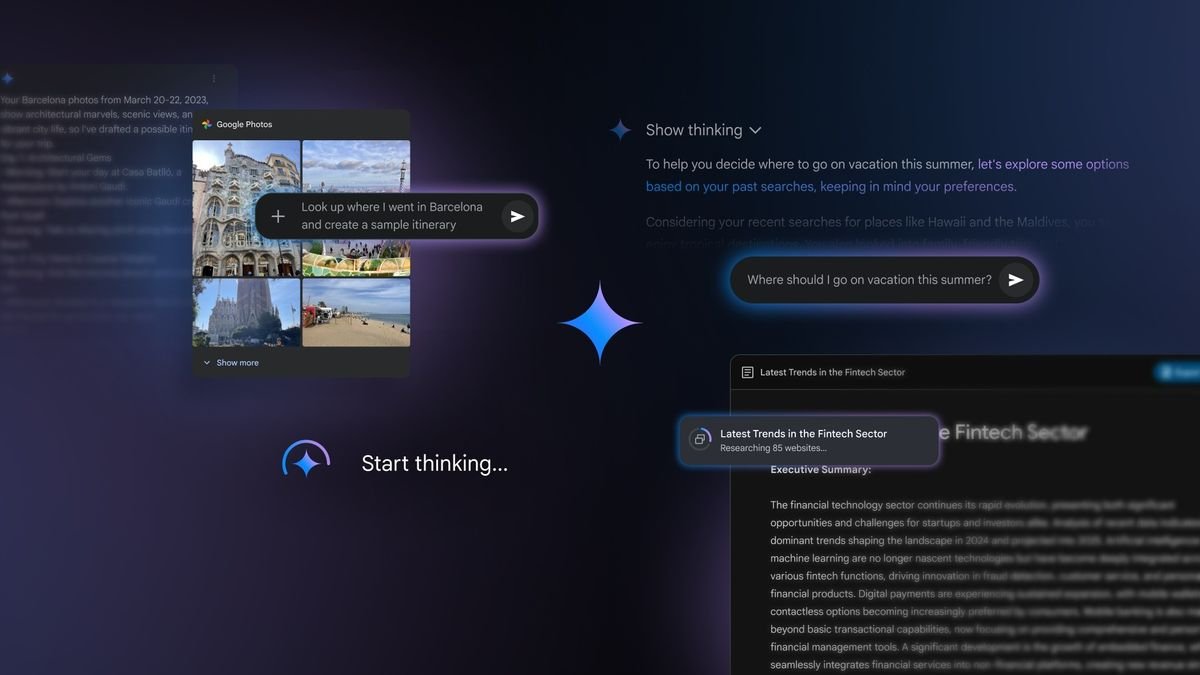

This upgrade introduces a range of new features, including the ability for users to upload files directly into the app. More importantly, the enhanced model allows Gemini to deconstruct prompts into manageable steps, leading to more precise and relevant responses. Users can expect a more personalized experience as Google rolls out an experimental feature called “personalization.” This feature utilizes the upgraded model to connect Gemini with various Google apps and services, tailoring responses based on individual user behavior.

For instance, if a user asks for restaurant recommendations, Gemini will draw on their search history to provide food-related suggestions. Users can activate this personalization feature by selecting it from the “Model” drop-down menu within the app, and they can opt out whenever they choose.

In addition to personalization, Google is expanding Gemini’s integration with other services such as Calendar, Notes, Tasks, and Photos. This enhancement will enable Gemini to handle intricate queries that span multiple Google applications. For example, a user could request a recipe from YouTube, add ingredients to a shopping list, and locate the nearest grocery store—all in one seamless interaction.

Furthermore, Google is enhancing the connection between Gemini and the Photos app, allowing users to ask questions based on their images. Another development is the rollout of Gemini’s “Gems,” which let users create their own personalized AI experiences at no cost.

Deep Research & Gemini

In addition to these updates, Google is upgrading the Deep Research feature, originally designed to help users explore complex subjects through easily digestible reports. The integration of the 2.0 Flash Thinking Experimental model will improve every stage of research: planning, searching, reasoning, analyzing, and reporting.

This upgrade will give users more insight into Gemini’s thought process as it works on a query. Users will be able to watch Gemini’s web searches in real time and see how it compiles information for their review. To mark the upgrade, Deep Research will be available to all users globally through the Model drop-down menu in Gemini. Free access will be limited to a few uses each month, however, while Gemini Advanced users will get expanded access for their complex projects.