Copilot+ PCs are the first personal computers to run Small Language Models (SLMs) directly on-device. Because these models use local processing power, they do not depend on the cloud the way platforms like ChatGPT do. The result should be a more responsive experience, with quicker interactions than cloud-based counterparts.

Alongside this, Microsoft has unveiled the AI Dev Gallery, an app designed to simplify the integration of on-device AI features into applications. The gallery is a resource for developers who want to experiment with various models, offering over 25 samples that can be downloaded and run on Windows. Developers can also export a sample's project files or source code into their own applications for immediate use.

The AI Dev Gallery is compatible with both Windows 10 and 11 and supports x64 and ARM64 architectures. Windows Latest cloned the AI Dev Gallery from its GitHub repository and compiled its own build of the application.

Currently, accessing the AI Dev Gallery requires building the project in Visual Studio, which calls for a minimum of 20GB of storage and a multi-core CPU. A GPU with 8GB of VRAM is recommended for heavier models but is not mandatory for lighter ones. Our initial setup was a Windows 11 machine with a 4-core CPU and 4GB of RAM. The app has two modes, Samples and Models, for exploring what is available.
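As a rough illustration of the requirements above, the following Python sketch checks free disk space and core count before attempting a build. The thresholds come from the figures quoted in this article; reading "multi-core" as "at least two logical cores" is our assumption, and the function name is hypothetical rather than anything shipped with the AI Dev Gallery.

```python
import os
import shutil

MIN_FREE_GB = 20  # storage figure quoted in the article
MIN_CORES = 2     # assumption: "multi-core" read as two or more logical cores


def meets_build_requirements(free_bytes: int, cores: int) -> bool:
    """Return True if free disk space and core count clear both thresholds."""
    free_gb = free_bytes / 1024**3
    return free_gb >= MIN_FREE_GB and cores >= MIN_CORES


if __name__ == "__main__":
    # Check the drive that holds the current working directory.
    usage = shutil.disk_usage(os.getcwd())
    cores = os.cpu_count() or 1  # cpu_count() can return None
    ok = meets_build_requirements(usage.free, cores)
    print(f"free: {usage.free / 1024**3:.1f} GiB, cores: {cores}, ok: {ok}")
```

The check deliberately ignores the GPU, since the 8GB VRAM figure is a recommendation rather than a requirement.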

Testing the models

Models designed for image and video generation typically require a download of around 5GB. To get a feel for how the app works, we opted for a smaller image-upscaling model of less than 100MB. After taking a screenshot, we upscaled it on the CPU; the app also offers the option to switch to GPU processing.

The upscaling process completed in under 30 seconds, even on a modest virtual machine, with RAM usage peaking at 1GB. The resulting image had a resolution of 9272×4900. However, the sub-100MB upscaling model had its limits: although it used local resources efficiently, the clarity of certain elements suffered significantly, and text in particular became nearly unreadable.

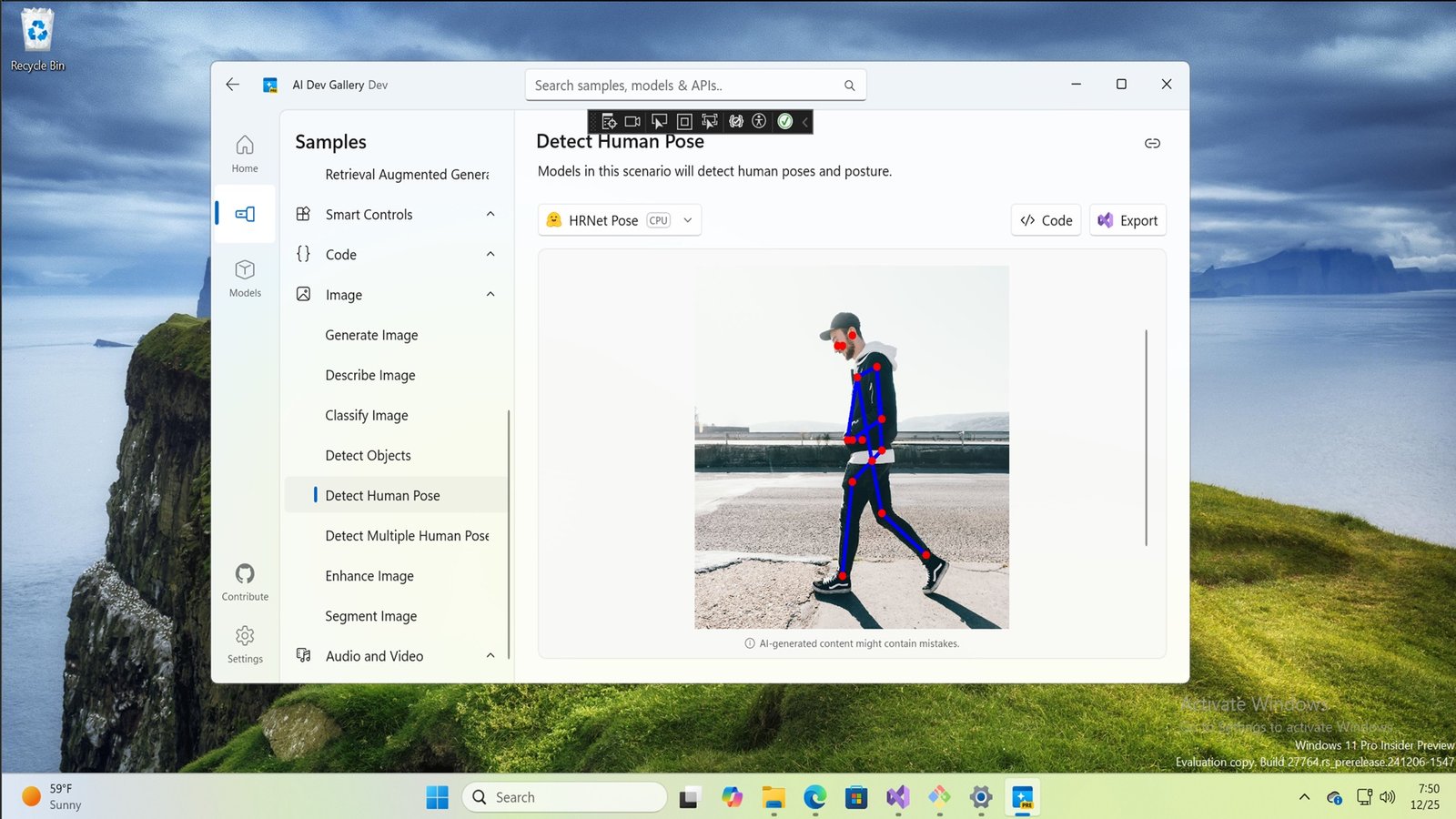
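To put the 9272×4900 output in perspective, the short Python calculation below works out its pixel count and uncompressed size. It assumes 8-bit RGB with no alpha channel, which is our assumption and not something the app reports.

```python
WIDTH, HEIGHT = 9272, 4900  # output resolution reported above
BYTES_PER_PIXEL = 3         # assumption: 8-bit RGB, no alpha channel


def raw_image_size(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL):
    """Return (pixel count, uncompressed size in bytes) for an image."""
    pixels = width * height
    return pixels, pixels * bytes_per_pixel


pixels, raw_bytes = raw_image_size(WIDTH, HEIGHT)
print(f"{pixels / 1e6:.1f} megapixels")             # 45.4 megapixels
print(f"{raw_bytes / 1024**2:.0f} MiB uncompressed")  # 130 MiB uncompressed
```

A single uncompressed copy of the output is therefore a small fraction of the 1GB peak we observed; the rest would go to the model, intermediate buffers, and the app itself.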

Moreover, the application has no way to preview the generated image in a larger window or in full-screen mode, and there is no straightforward option to save the output to disk. We also tried a model named Detect Human Pose, which successfully identified a person’s position within an image. Interestingly, it even marked positions on screenshots of our desktop.

While it remains somewhat unclear how these models will be integrated into applications, many features can indeed run locally. However, PCs will need substantial storage, a capable CPU, and ideally 16GB or more of RAM to accommodate these models. The question arises: is it worthwhile to download a 5GB model to convert a text prompt into an image, or is it more efficient to wait 30 seconds for a web-based solution? Many of these features cater to niche use cases and specific implementation environments rather than to the broader Windows 11 user base.
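The trade-off in that question can be put into rough numbers. The Python sketch below estimates how long a 5GB download takes and how many prompts it would take for the local model to repay that time against a 30-second web wait. The link speed and the local generation time are hypothetical inputs, not measurements from our testing.

```python
import math


def download_seconds(size_gb: float, link_mbps: float) -> float:
    """Time to download size_gb (decimal gigabytes) over a link_mbps connection."""
    bits = size_gb * 8 * 1000**3
    return bits / (link_mbps * 1_000_000)


def break_even_requests(size_gb: float, link_mbps: float,
                        web_wait_s: float = 30.0,
                        local_wait_s: float = 10.0) -> float:
    """Requests needed before the one-time download pays for itself.

    local_wait_s is a hypothetical per-request time for the local model.
    Returns math.inf if the local model is no faster than the web service.
    """
    saved_per_request = web_wait_s - local_wait_s
    if saved_per_request <= 0:
        return math.inf
    return download_seconds(size_gb, link_mbps) / saved_per_request


# Example with assumed values: 5GB model, 100 Mbps link, 10 s local generation.
print(download_seconds(5, 100))     # 400.0 seconds for the download
print(break_even_requests(5, 100))  # 20.0 requests to break even
```

Under these assumed numbers, the download only pays off in time after about 20 prompts, which fits the view that local models suit frequent or specialized use rather than occasional requests. The sketch ignores privacy, offline access, and disk cost, which may matter more than time for some users.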