We are excited to introduce Mu, our newest on-device small language model, built for scenarios that require inferring complex input-output relationships. Mu runs locally and efficiently on the device, and it powers the agent in Settings, which is currently available to Windows Insiders in the Dev Channel on Copilot+ PCs. The model maps natural language queries to Settings function calls.

Model training Mu

The development of Mu was informed by our experiences with Phi Silica, allowing us to glean critical insights into optimizing models for performance and efficiency on Neural Processing Units (NPUs). Mu is a micro-sized, task-specific language model, meticulously engineered to operate seamlessly on NPUs and edge devices.

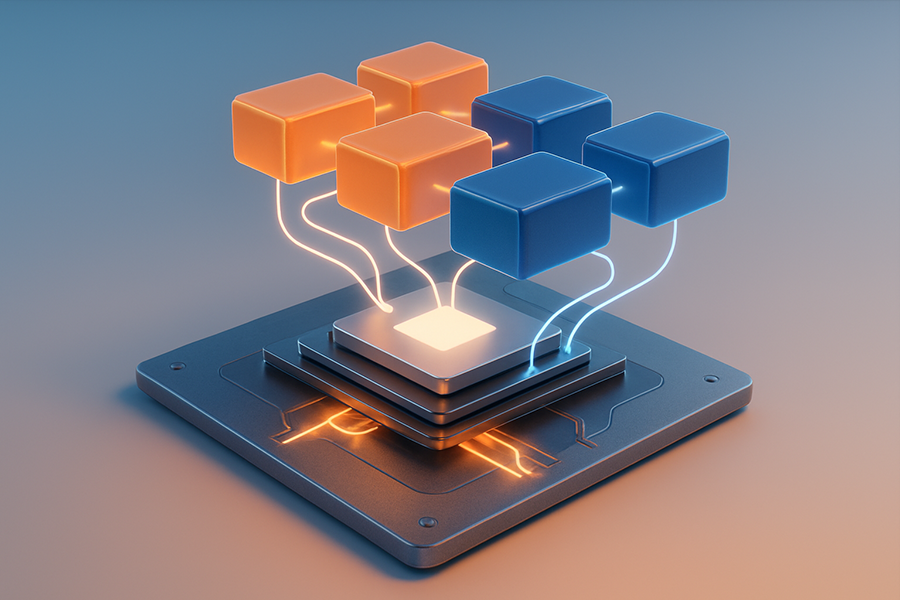

At its core, Mu is a 330M encoder-decoder language model, optimized for small-scale deployment on the NPUs of Copilot+ PCs. Utilizing a transformer encoder-decoder architecture, it first converts input into a fixed-length latent representation through an encoder, followed by the decoder generating output tokens based on that representation. This architecture offers substantial efficiency advantages, as illustrated in the accompanying figure, which highlights how an encoder-decoder reuses the latent representation of the input, unlike a decoder-only model that must consider the entire input-output sequence.

In practical terms, this translates to reduced latency and increased throughput on specialized hardware. For instance, on a Qualcomm Hexagon NPU, Mu’s encoder-decoder approach achieved approximately 47% lower first-token latency and 4.7× higher decoding speed compared to a similarly sized decoder-only model, making it ideal for real-time applications.
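To make these ratios concrete, the short sketch below applies them to a made-up baseline. Only the 47% and 4.7× figures come from the measurements above; the baseline latency and throughput, and the helper names `apply_reported_speedups` and `response_time_ms`, are assumptions chosen purely for illustration.

```python
# Hypothetical baseline for a decoder-only model of similar size.
# These two numbers are assumptions, not measurements from this post.
BASELINE_FIRST_TOKEN_MS = 400.0
BASELINE_TOKENS_PER_SEC = 40.0


def apply_reported_speedups(first_token_ms, tokens_per_sec,
                            latency_reduction=0.47, decode_speedup=4.7):
    """Scale a baseline by the relative improvements quoted in the text."""
    new_first_token_ms = first_token_ms * (1.0 - latency_reduction)
    new_tokens_per_sec = tokens_per_sec * decode_speedup
    return new_first_token_ms, new_tokens_per_sec


def response_time_ms(first_token_ms, tokens_per_sec, n_tokens):
    """First-token latency plus the time to decode the remaining tokens."""
    return first_token_ms + (n_tokens - 1) / tokens_per_sec * 1000.0


mu_first_ms, mu_tps = apply_reported_speedups(
    BASELINE_FIRST_TOKEN_MS, BASELINE_TOKENS_PER_SEC)

# Compare end-to-end time for a short, 20-token response.
baseline_total = response_time_ms(
    BASELINE_FIRST_TOKEN_MS, BASELINE_TOKENS_PER_SEC, 20)
mu_total = response_time_ms(mu_first_ms, mu_tps, 20)
print(f"baseline: {baseline_total:.0f} ms, encoder-decoder: {mu_total:.0f} ms")
```

For short outputs such as a single function call, both terms matter: the first-token latency dominates when only a few tokens are generated, while the decoding speed dominates as the output grows.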

Mu’s design was carefully tuned to the constraints and capabilities of NPUs, which meant adjusting the model architecture and parameter shapes to fit the hardware’s parallelism and memory limits. We chose layer dimensions that match the NPU’s preferred tensor sizes so that matrix multiplications and other operations run at peak efficiency. We also optimized the distribution of parameters between the encoder and decoder, favoring a 2/3–1/3 split to improve performance per parameter.

Weight sharing in certain components further reduced the total parameter count: the input token embeddings and the output embeddings are tied, so a single matrix serves both roles. This not only saves memory but also keeps the encoding and decoding vocabularies consistent. In addition, Mu restricts its operations to NPU-optimized operators so that it can take full advantage of the hardware’s acceleration.
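The parameter accounting described above can be sketched in a few lines. The 330M total and the 2/3–1/3 split come from the text; the vocabulary size and model width are hypothetical values picked only to show how much tying the embeddings can save, and they are not Mu’s actual dimensions.

```python
TOTAL_PARAMS = 330_000_000  # model size stated in the text

# Assumed values for illustration only; Mu's real dimensions are not given.
HYPOTHETICAL_VOCAB_SIZE = 32_000
HYPOTHETICAL_D_MODEL = 1_024


def split_budget(total, encoder_share=2 / 3):
    """Divide a parameter budget between the encoder and the decoder."""
    encoder = round(total * encoder_share)
    return encoder, total - encoder


def embedding_params(vocab_size, d_model, tied):
    """Count embedding parameters with or without weight tying.

    Untied: one table for input lookup and one for output logits.
    Tied: a single shared table is used for both.
    """
    table = vocab_size * d_model
    return table if tied else 2 * table


encoder_params, decoder_params = split_budget(TOTAL_PARAMS)
untied = embedding_params(HYPOTHETICAL_VOCAB_SIZE, HYPOTHETICAL_D_MODEL, tied=False)
tied = embedding_params(HYPOTHETICAL_VOCAB_SIZE, HYPOTHETICAL_D_MODEL, tied=True)

print(f"encoder: {encoder_params:,}  decoder: {decoder_params:,}")
print(f"embedding parameters saved by tying: {untied - tied:,}")
```

In a small model the embedding table is a large fraction of the total, so sharing it frees a meaningful part of the budget for the encoder and decoder layers.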

Packing performance in a tenth the size

Mu introduces three pivotal transformer upgrades that enhance performance while maintaining a compact size:

- Dual LayerNorm (pre- and post-LN) – This technique normalizes activations both before and after each sub-layer, stabilizing training with minimal overhead.

- Rotary Positional Embeddings (RoPE) – By embedding relative positions directly in attention, this method improves long-context reasoning and supports extrapolation to sequences longer than those seen during training.

- Grouped-Query Attention (GQA) – This approach shares keys and values across head groups, significantly reducing attention parameters and memory usage while preserving head diversity, thus lowering latency and power consumption on NPUs.
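Two of these upgrades are easy to illustrate in isolation. The sketch below is a plain-Python approximation, not Mu’s implementation: `rope` rotates pairs of vector components by a position-dependent angle (using the commonly used base of 10000, an assumption here), and the two GQA helpers show how query heads map onto shared key/value heads and how that changes the projection parameter count. The head counts and model width are hypothetical.

```python
import math


def rope(vec, position, base=10000.0):
    """Apply a rotary embedding: rotate each consecutive pair of components.

    `vec` must have even length. Pair i//2 is rotated by position * base**(-i/d).
    """
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def kv_head_for(query_head, n_query_heads, n_kv_heads):
    """Return the shared key/value head used by a given query head."""
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def attention_proj_params(d_model, n_query_heads, n_kv_heads):
    """Count Q, K, V, and output projection weights (biases ignored)."""
    head_dim = d_model // n_query_heads
    q = d_model * n_query_heads * head_dim
    kv = 2 * d_model * n_kv_heads * head_dim
    o = n_query_heads * head_dim * d_model
    return q + kv + o


# RoPE: the attention score depends only on the relative offset (3 here).
q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.25]
score_near = dot(rope(q, 5), rope(k, 2))
score_far = dot(rope(q, 13), rope(k, 10))

# GQA: hypothetical layer with 16 query heads sharing 4 key/value heads.
mha_params = attention_proj_params(1024, 16, 16)
gqa_params = attention_proj_params(1024, 16, 4)
print(f"same relative offset, same score: {abs(score_near - score_far) < 1e-9}")
print(f"MHA projections: {mha_params:,}  GQA projections: {gqa_params:,}")
```

Because the score between a rotated query and a rotated key depends only on their distance, the same attention pattern applies at any absolute position. With grouped keys and values, the key/value cache shrinks by the same factor as the number of key/value heads, which is where the memory savings come from.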

We employed advanced training techniques, including warmup-stable-decay schedules and the Muon optimizer, to refine Mu’s performance further. The result is a model that achieves impressive accuracy and rapid inference within the constraints of edge devices.
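A warmup-stable-decay schedule has three phases: the learning rate ramps up, holds at its peak, then decays at the end of training. The sketch below is one simple variant with linear warmup and linear decay. The phase fractions, the peak rate, and the function name `wsd_learning_rate` are assumptions for illustration; the schedule actually used for Mu is not specified here.

```python
def wsd_learning_rate(step, total_steps, peak_lr,
                      warmup_frac=0.05, decay_frac=0.2, min_lr=0.0):
    """Return the learning rate at `step` for a warmup-stable-decay schedule."""
    warmup_steps = int(total_steps * warmup_frac)
    decay_steps = int(total_steps * decay_frac)
    decay_start = total_steps - decay_steps

    if step < warmup_steps:
        # Warmup: ramp linearly up to the peak rate.
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        # Stable: hold the peak rate for most of training.
        return peak_lr
    # Decay: anneal linearly from the peak rate to min_lr.
    progress = min(1.0, (step - decay_start) / max(1, decay_steps))
    return peak_lr + (min_lr - peak_lr) * progress


# Sample the schedule at a few points of a hypothetical 1000-step run.
for s in (0, 49, 500, 900, 1000):
    print(s, wsd_learning_rate(s, total_steps=1000, peak_lr=1e-3))
```

One practical appeal of this shape is that the stable phase can be extended or checkpointed without committing to a total step count up front, since only the final decay depends on where training ends.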

Mu was trained on A100 GPUs on Azure Machine Learning in several phases. We first pre-trained the model on hundreds of billions of high-quality educational tokens so that it would learn language syntax, grammar, semantics, and general knowledge. We then distilled knowledge from Microsoft’s Phi models, which improved Mu’s parameter efficiency. The resulting base model can be adapted to a variety of tasks, and fine-tuning it on task-specific data with low-rank adaptation (LoRA) methods improves its performance further.
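For readers unfamiliar with LoRA, the idea is to freeze a weight matrix W and train only a low-rank update, so the effective weight becomes W + (alpha / r) · B · A. The sketch below shows this in plain Python on tiny matrices. The matrix sizes, the rank, and the scaling value are hypothetical, and this is a generic illustration of the method rather than the configuration used for Mu.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * (B @ A).

    W is d_out x d_in and stays frozen; A is r x d_in and B is d_out x r,
    and only A and B are trained.
    """
    r = len(a)
    scale = alpha / r
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def lora_trainable_params(d_in, d_out, r):
    """Trainable parameters in the adapter for one weight matrix."""
    return r * (d_in + d_out)


# Tiny example: a 2x2 frozen weight with a rank-1 update.
w = [[1.0, 0.0], [0.0, 1.0]]
a = [[1.0, 2.0]]        # r x d_in
b = [[3.0], [4.0]]      # d_out x r
adapted = lora_effective_weight(w, a, b, alpha=2.0)

# Hypothetical 1024 x 1024 layer with rank 8.
full = 1024 * 1024
adapter = lora_trainable_params(1024, 1024, 8)
print(adapted)
print(f"full matrix: {full:,} weights, LoRA adapter: {adapter:,} weights")
```

Because B is typically initialized to zero, the adapted model starts out identical to the base model and only drifts from it as the adapter is trained on the task data.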

We assessed Mu’s accuracy by fine-tuning it on several tasks, including SQuAD, CodeXGLUE, and the Windows Settings agent. Despite its compact size, fine-tuned Mu matched a similarly fine-tuned Phi model on CodeXGLUE and came within roughly 0.08 to 0.15 of it on the Settings agent and SQuAD.

| Task | Fine-tuned Mu | Fine-tuned Phi |
| --- | --- | --- |
| SQuAD | 0.692 | 0.846 |
| CodeXGLUE | 0.934 | 0.930 |
| Settings Agent | 0.738 | 0.815 |

Model quantization and model optimization

To ensure Mu operates efficiently on-device, we implemented advanced model quantization techniques tailored for NPUs on Copilot+ PCs. Utilizing Post-Training Quantization (PTQ), we converted model weights and activations from floating-point to integer representations, primarily 8-bit and 16-bit. This process allowed us to quantize a fully trained model without necessitating retraining, thereby accelerating our deployment timeline while optimizing performance on Copilot+ devices.
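The core of the float-to-integer conversion can be shown with a simplified, per-tensor symmetric scheme. This is a minimal sketch under stated assumptions: production PTQ pipelines typically calibrate activation ranges on sample data and may use per-channel scales or zero points, none of which is modeled here, and the function names are illustrative.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers using one scale for the whole tensor."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for 8-bit, 32767 for 16-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integer representation."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]

q8, scale8 = quantize_symmetric(weights, num_bits=8)
q16, scale16 = quantize_symmetric(weights, num_bits=16)

err8 = max(abs(w - r) for w, r in zip(weights, dequantize(q8, scale8)))
err16 = max(abs(w - r) for w, r in zip(weights, dequantize(q16, scale16)))
print(f"8-bit max error: {err8:.6f}, 16-bit max error: {err16:.6f}")
```

The rounding error is bounded by half the scale, which is why 16-bit representations are useful for the tensors that are most sensitive to precision loss while 8-bit is used where the savings matter most.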

Our collaboration with silicon partners, including AMD, Intel, and Qualcomm, was instrumental in ensuring that the quantized operations running Mu were fully optimized for the target NPUs. This work involved tuning mathematical operators and aligning with hardware-specific execution patterns. The result is highly efficient inference on edge devices, with output speeds exceeding 200 tokens per second on a Surface Laptop 7.

Model tuning the agent in Settings

To improve the Windows experience, we set out to simplify the task of changing hundreds of system settings. Our goal was an AI-powered agent within Settings that understands natural language and changes the relevant settings, limited to changes that can be undone. Integrating this agent into the existing search box required ultra-low latency across a large number of possible settings.

While baseline Mu performed admirably, it experienced a 2x precision drop without fine-tuning. To bridge this gap, we expanded our training dataset to 3.6 million samples and included hundreds of settings. Employing synthetic approaches for automated labeling, prompt tuning with metadata, diverse phrasing, noise injection, and smart sampling, we successfully fine-tuned Mu for the Settings Agent, achieving response times under 500 milliseconds.
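As a rough illustration of two of these data techniques, diverse phrasing and noise injection, the sketch below expands one labeled query into several phrasings and randomly perturbs some of them. The templates, the adjacent-character swap, and the noise probability are all hypothetical; the actual data pipeline is not described in this post.

```python
import random


def inject_typo(text, rng):
    """Swap two adjacent characters at a random position."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def augment(query, templates, rng, noise_prob=0.3):
    """Return one phrasing per template, some with injected noise."""
    samples = []
    for template in templates:
        phrased = template.format(query=query)
        if rng.random() < noise_prob:
            phrased = inject_typo(phrased, rng)
        samples.append(phrased)
    return samples


# Hypothetical phrasing templates for a single labeled intent.
TEMPLATES = [
    "{query}",
    "please {query}",
    "how do I {query}",
    "I want to {query}",
]

rng = random.Random(0)  # fixed seed so the augmentation is reproducible
samples = augment("turn on night light", TEMPLATES, rng)
for sample in samples:
    print(sample)
```

Every generated sample keeps the label of the original query, so a small set of seed examples per setting can be expanded into many training pairs.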

To effectively handle short and ambiguous user queries, we curated a diverse evaluation set that combined real user inputs, synthetic queries, and common settings. This ensured the model could adeptly manage a wide range of scenarios. We found that multi-word queries, which conveyed clear intent, yielded the best results, while shorter queries often lacked sufficient context. To address this, the agent in Settings is integrated into the search box, allowing short queries to surface lexical and semantic results while enabling multi-word queries to trigger high-precision actionable responses.
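The routing behavior described above can be sketched as a simple dispatch on query length. This is a deliberately minimal illustration: the function name, the return labels, and the two-word threshold are assumptions, and the shipped experience may use richer signals than word count.

```python
def route_query(query, min_words_for_action=2):
    """Decide how the search box should handle a query.

    Short queries fall back to lexical and semantic search results;
    multi-word queries are sent to the agent for an actionable response.
    """
    words = query.split()
    if len(words) >= min_words_for_action:
        return "agent_action"
    return "search_results"


for text in ("brightness", "increase brightness", "make my screen warmer at night"):
    print(f"{text!r} -> {route_query(text)}")
```

Keeping the fallback path in place means a short query never produces a low-confidence action; the user simply sees the familiar search results instead.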

Managing the extensive array of Windows settings posed its own challenges, particularly where functionality overlaps. For example, even a simple query like “Increase brightness” could refer to more than one setting change. To address this, we refined our training data to prioritize the most frequently used settings, and we continue to refine the experience for more complex tasks.

We look forward to feedback from users in the Windows Insiders program as we continue to refine the agent in Settings. This work reflects the collaborative efforts of our Applied Science Group and the partner teams that made it possible.